DeepMind, Google’s AI lab, has a history of showing off its AI’s capabilities through games, walloping human opponents in the process. In 2016, AlphaGo defeated Go world champion Lee Sedol. In 2019, AlphaStar constructed enough additional pylons to beat professional StarCraft II player (yes, that’s a thing) Grzegorz “MaNa” Komincz 5-0. And in 2020, Agent57 outscored the standard human benchmark on all 57 Atari 2600 games.

The lab’s latest AI news is something different, though. Instead of designing a model to master a single game, DeepMind has teamed up with researchers from the University of British Columbia to develop an AI agent capable of playing a whole bunch of totally different games. Called SIMA (scalable instructable multi-world agent), the project also marks a shift from competitive to cooperative play as the AI operates by following human instructions.

But SIMA wasn’t created simply to help sleepy players grind out levels or farm up resources. The researchers instead hope that by better understanding how SIMA learns in these virtual playgrounds, we can make AI agents more cooperative and helpful in the real world.

Choose your own AI adventure

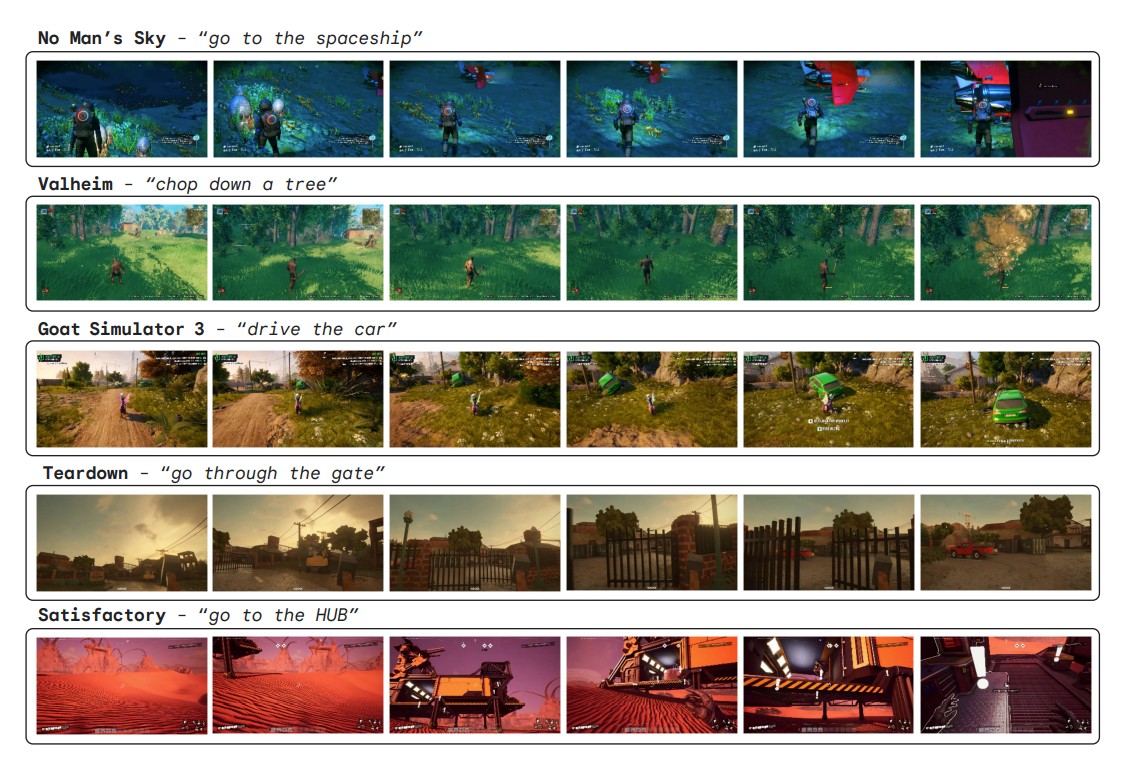

Working with developer studios, DeepMind trained and tested SIMA across nine different video games. These included the enormous (if sometimes tedious) space exploration game No Man’s Sky, the Viking survival game Valheim, the sci-fi factory-builder Satisfactory, and even Goat Simulator 3, a game in which players control a goat spreading mayhem and hijinks across a fictional Southern California city.

That is a diverse portfolio, but DeepMind didn’t choose these nine because it fears a future in which only an AI can protect us from extraterrestrials and jetpacking goats. Rather, the games share characteristics that are analogous to the real world in important ways: they take place in 3D environments that change in real time, independently of player actions, and they offer a range of possible decisions and open-ended interactions beyond simply winning.

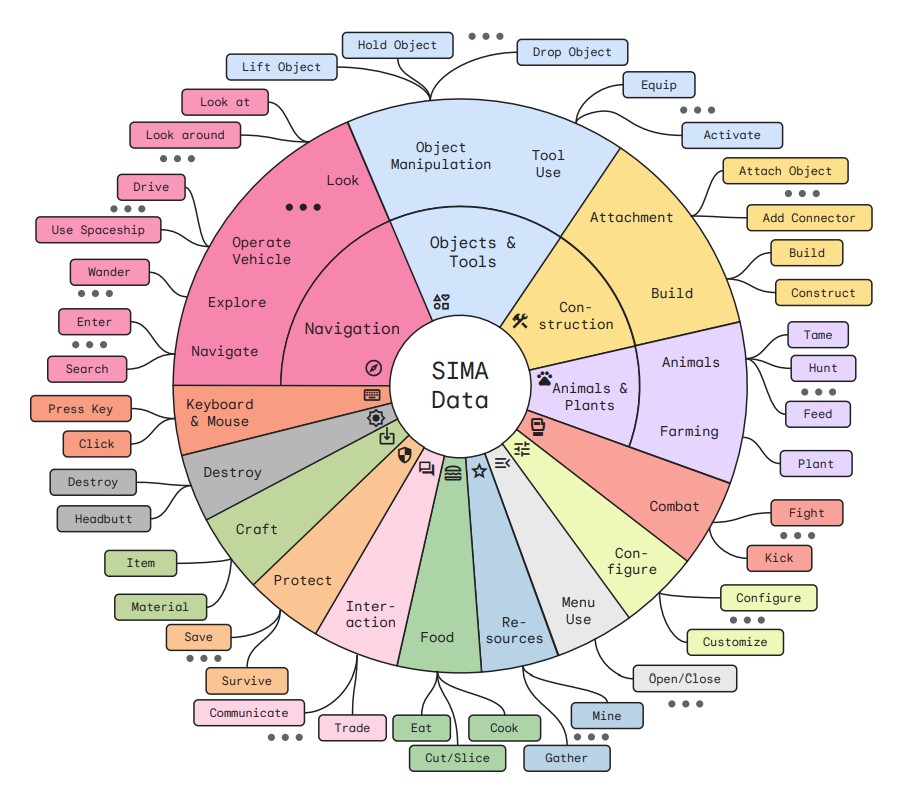

To teach SIMA how to move around these worlds, the researchers trained it to understand language-based instructions, starting with videos of human gameplay. In some recordings, two people played together: one gave instructions and the other followed them. In others, people played freely, and the researchers later annotated the footage with written instructions describing the on-screen action. In both cases, the goal was to capture how language maps onto behaviors and actions in the game.

To make SIMA’s skills transferable to any 3D environment, not just the ones it trained on, the researchers imposed some additional design constraints:

- Environments weren’t slowed down to give SIMA extra time to decide its next action. It had to operate at the game’s normal speed.

- SIMA had access only to the images and text on the screen, the same information available to a human player. It received no privileged information from, say, the game’s source code.

- SIMA could only interact with the game using the equivalent of a keyboard and mouse.

Finally, SIMA’s goals in the game had to be given by a human player in real time, using natural-language instructions. The AI wasn’t allowed to simply play the game over and over until it mastered a specific, predetermined goal. In other words, SIMA had to learn to follow its human partners’ directions rather than master a game through trial and error over thousands of attempts.

For instance, if the most recent in-game objective in Valheim was to collect 10 pieces of wood, but a human player told SIMA to collect 10 rocks instead, SIMA should start mining a nearby boulder rather than cutting down trees.

“This is an important goal for AI in general,” the researchers note, “because while large language models [LLMs, like ChatGPT] have given rise to powerful systems that can capture knowledge about the world and generate plans, they currently lack the ability to take actions on our behalf.”

Jack of all trades, mastery to come

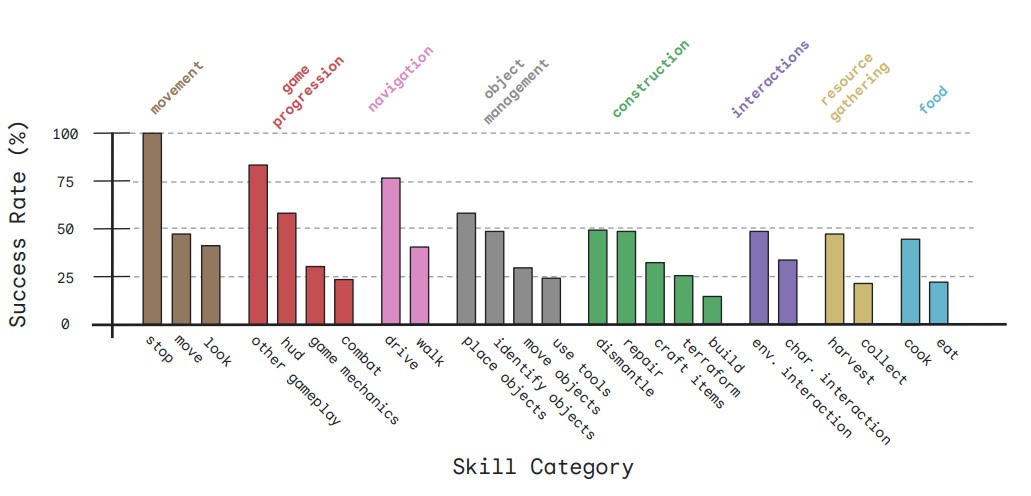

SIMA was evaluated on a total of 1,485 unique tasks spanning nine skill categories. The skill categories were general across games (navigation, construction, and using objects, for example), while the tasks themselves were often specific to a particular game, such as “go to your ship,” “build a generator,” or “cut that potato.”

Overall, SIMA could understand and act on language-based instructions. Its success rate varied across tasks and skill categories, but it performed significantly better than the other AI agents used in the evaluation.

For example, SIMA outperformed agents trained in a single environment, with an average improvement of 67%. The researchers also trained versions of SIMA on every data set except one. When these versions played the held-out game, they performed almost as well as the agents trained specifically on that game. These results suggest that SIMA’s skills are transferable across different 3D environments.

The researchers also pitted SIMA against an AI agent trained without language inputs. In No Man’s Sky, for example, SIMA’s success rate averaged 34%, while the no-language agent averaged only 11%, a performance the researchers describe as “appropriate but aimless.” These results further suggest that language is a vital component of SIMA’s performance.

“This research marks the first time an agent has demonstrated it can understand a broad range of gaming worlds, and follow natural-language instructions to carry out tasks within them, as a human might,” the researchers note.

That said, don’t expect SIMA to help you beat a particularly tricky game anytime soon. In No Man’s Sky, human players achieved a 60% success rate even under strict judging criteria, well ahead of SIMA’s 34%.

And because these games are complex, often combining dozens of potential interactions with hundreds of objects at any given moment, the researchers limited instructions to those that could “be completed in less than approximately 10 seconds.” It remains to be seen whether a future version of SIMA can handle the kind of long-term, multi-layered plans your average group of middle-school Minecraft players pulls off every Saturday night.

The team published their findings in a technical report.

Thinking beyond the game

SIMA is a work in progress, and looking ahead, the researchers hope to expand their game portfolio to include new 3D environments and larger data sets in order to scale up SIMA’s abilities. Even so, they find the preliminary results promising.

“Learning to play even one video game is a technical feat for an AI system, but learning to follow instructions in a variety of game settings could unlock more helpful AI agents for any environment. Our research shows how we can translate the capabilities of advanced AI models into useful, real-world actions through a language interface,” they write.

If we want AI to operate in the physical world, whether as self-driving cars or robots, virtual environments offer researchers a safer, less wasteful way to test these systems.

If an AI crashes a car while learning to drive in a virtual world, it can learn from the mistake and try again. Crash a car in the real world, and even non-fatal consequences are far more costly. Once trained, the AI could be directed outside the virtual setting using the same language instructions, potentially even if it was trained as a goat.

“We believe that by doing so, we will make SIMA an ideal platform for doing cutting-edge research on grounding language and pre-trained models safely in complex environments, thereby helping to tackle a fundamental challenge of AGI,” the researchers conclude.