What are the odds that AI will kill humanity by 2100? That was one of the many questions asked in the Existential Risk Persuasion Tournament, held by the Forecasting Research Institute. The contest collected predictions from technical experts and “superforecasters” (people with a track record of making accurate predictions) about various existential threats — ranging from AI to nuclear war to pandemics.

The threat of AI was the most divisive topic. AI experts said there is a 3% chance that AI will kill humanity by 2100, according to their median response. Superforecasters were far more optimistic, putting the odds of AI-caused extinction at just 0.38%.

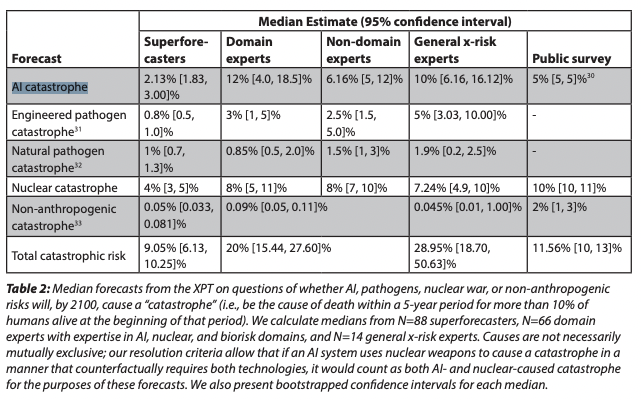

The groups also submitted predictions on the likelihood that AI will cause a major catastrophe (defined as causing the deaths of at least 10% of humans within a 5-year period), even if it doesn’t wipe us out entirely.

The median prediction of AI experts was a disturbingly high 12%. The superforecasters again put the odds far lower, at 2.13%. (It’s worth noting that the Existential Risk Persuasion Tournament was held from June to October 2022, just before OpenAI released ChatGPT in November of that year.)

Is there any reason to trust one group over the other?

The Existential Risk Persuasion Tournament

The report from the Forecasting Research Institute says that the tournament wasn’t designed to answer that question, but rather to start collecting specific predictions on both short- and long-term questions.

The goal is to see whether there’s a connection between the two: Do superforecasters who make good predictions on short-term events also make good predictions about events 50, 75, or 100 years from now? It’s an open question.

To collect predictions about high-stakes existential risks, the Forecasting Research Institute asked a total of 169 superforecasters, AI experts, and experts in other fields to predict the likelihood of different disasters occurring over various timeframes: 2024, 2030, 2050, and 2100. The participants submitted predictions individually and in groups, and were offered incentives, including cash prizes, to encourage engagement.

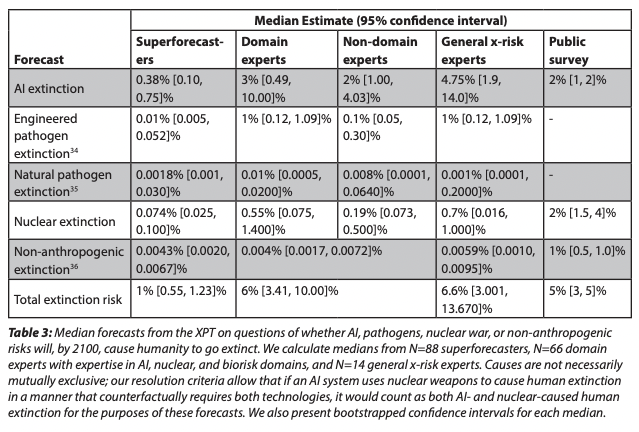

Although predictions ranged widely and there was disagreement within the groups, the experts were generally more concerned about all kinds of existential risks. Counting all causes together, experts predicted a 6% chance of human extinction by 2100, while superforecasters put the odds at 1%. Experts also predicted a 20% chance of a catastrophe this century, while superforecasters estimated less than half of that (around 9%).

You might assume that subject-matter experts are always better at predicting the future than people outside their field, but that isn’t always the case.

Tracking predictions over time

Nobody can see the future. But in recent years, the field of forecasting research has been trying to get a best-guess view by developing methodologies designed to measure and improve the accuracy of human predictions. The basic idea is to have people submit specific, quantifiable predictions about future events or trends, record the outcomes, and track who is consistently getting it right. People with a knack for making good predictions are called “superforecasters.”
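As a rough illustration of how this kind of tracking can work, here is a minimal Python sketch that scores forecasters with the Brier score, a standard accuracy measure in forecasting research. The article doesn’t say which scoring rule the tournaments used, so treat that choice as an assumption; the questions, outcomes, and forecaster names are all hypothetical. This sketch uses the common single-probability form of the score, where 0 is a perfect score and 1 is the worst possible.

```python
from statistics import mean


def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between probability forecasts and 0/1 outcomes.

    Lower is better: 0.0 is perfect, and always answering 50% scores 0.25.
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("each forecast needs exactly one outcome")
    return mean((p - o) ** 2 for p, o in zip(forecasts, outcomes))


# Hypothetical resolved questions: 1 = the event happened, 0 = it did not.
outcomes = [1, 0, 0, 1, 0]

# Hypothetical forecasters and the probability each gave to every question.
forecasters = {
    "calibrated": [0.8, 0.2, 0.1, 0.7, 0.3],
    "overconfident": [1.0, 0.0, 1.0, 0.0, 0.0],
    "fence-sitter": [0.5, 0.5, 0.5, 0.5, 0.5],
}

# Rank forecasters from most to least accurate (lowest Brier score first).
ranking = sorted(forecasters, key=lambda name: brier_score(forecasters[name], outcomes))

if __name__ == "__main__":
    for name in ranking:
        print(f"{name}: {brier_score(forecasters[name], outcomes):.3f}")
```

Run on these made-up numbers, the calibrated forecaster scores about 0.054, the fence-sitter 0.25, and the overconfident forecaster 0.4. Confident predictions that turn out wrong are penalized heavily, which is one reason a scoring rule like this rewards careful, probabilistic thinking over bold calls.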

Superforecasters don’t necessarily have subject-matter expertise on the question at hand. But what they do have is a general toolkit for how to think about probabilities, and a proven track record of making correct predictions about short-term questions. They are often even more prescient than experts.

That’s one key finding from research conducted by psychologist Philip Tetlock, the chief scientist at the Forecasting Research Institute. In studying forecasting and decision-making, Tetlock has found that experts — especially those who make money predicting things in their field, such as by appearing on TV or charging consulting fees — are often no more accurate in guessing the future than people in unrelated fields.

“In this age of academic hyperspecialization,” Tetlock wrote in his landmark 2005 book Expert Political Judgment, “there is no reason for supposing that contributors to top journals — distinguished political scientists, area study specialists, economists, and so on — are any better than journalists or attentive readers of The New York Times in ‘reading’ emerging situations.”

Tetlock put his claim to the test in 2011 when the Intelligence Advanced Research Projects Activity (IARPA) launched a prediction tournament designed to identify the best ways to predict geopolitical events. Participants included U.S. intelligence analysts with access to classified data.

Superforecasters, with no relevant domain expertise, also joined the contest. They were selected through the Good Judgment Project, led by Tetlock and Barbara Mellers at the University of Pennsylvania, which aimed to assemble a team of forecasters whose decision-making process tends to be less affected by cognitive biases.

The outcome was surprisingly one-sided: the superforecasters beat the experts by a decisive margin for four straight years.

What explains the superforecasters’ edge? One explanation is perverse incentives. For example, experts might earn more money or attention by making bold, but not necessarily sound, predictions, or they might face social pressure to agree with the consensus of their colleagues.

Another explanation centers on the counterintuitive pitfalls of being a specialist: Knowing a ton of information about a narrow topic might lead experts to become overly attached to particular frameworks or theories, or to develop confirmation bias.

In contrast, savvy generalists (read: superforecasters) often have a clearer window into the future because they aren’t committed to the importance of any particular theory or field. Tetlock’s research on superforecasters suggests their predictive edge comes from intellectual humility, an analytical and probabilistic mindset, an ability to synthesize information from various sources, and the willingness to revise their predictions in light of new evidence.

Of course, it isn’t clear whether Tetlock’s findings about expert predictions from the fields of intelligence, geopolitics, or social science are generalizable to forecasts based on expertise in other fields, such as biology or AI.

The reliability of long-term forecasting

Capturing how experts and superforecasters are thinking about existential risks was one goal of the 2022 Existential Risk Persuasion Tournament.

Another goal was to begin collecting long-term data to determine whether people who make good short-term predictions are also good at forecasting the distant future. If empirical evidence shows this is the case, policymakers might be more willing to incorporate superforecasters’ predictions when making decisions with long-term impact.

Time will tell.

“It will take 10-20 years before we can even begin to answer the question of whether short-run and long-run forecasting accuracy are correlated over decades,” the report from the Forecasting Research Institute concludes. “For now, readers must decide for themselves how much weight to give various groups’ forecasts.”