This article is an installment of Future Explored, a weekly guide to world-changing technology.

It’s 2028, and a “crazy” bet some Harvard dropouts made just a few years ago is already paying off. Their idea: a brand new kind of microchip that will shift the ground beneath our massive AI economy.

AI chips

Microchips are the backbone of our electronics — using tiny components called “transistors,” they control the flow of electric signals in ways that allow our devices to process and store information.

The design and organization of a chip’s components is called its “architecture,” and certain ones are particularly well suited for certain applications — graphics processing units (GPUs), for example, have architectures that let them process images more efficiently than central processing units (CPUs).

GPUs are also well suited for training and running generative AIs, but to meet the power demands of these systems, we may need brand new chip architectures that allow them to process data more efficiently.

To find out what these AI chips could look like, let’s explore the history of microchips, the company dominating the market right now, and the chip startup with a “crazy” idea to take it down.

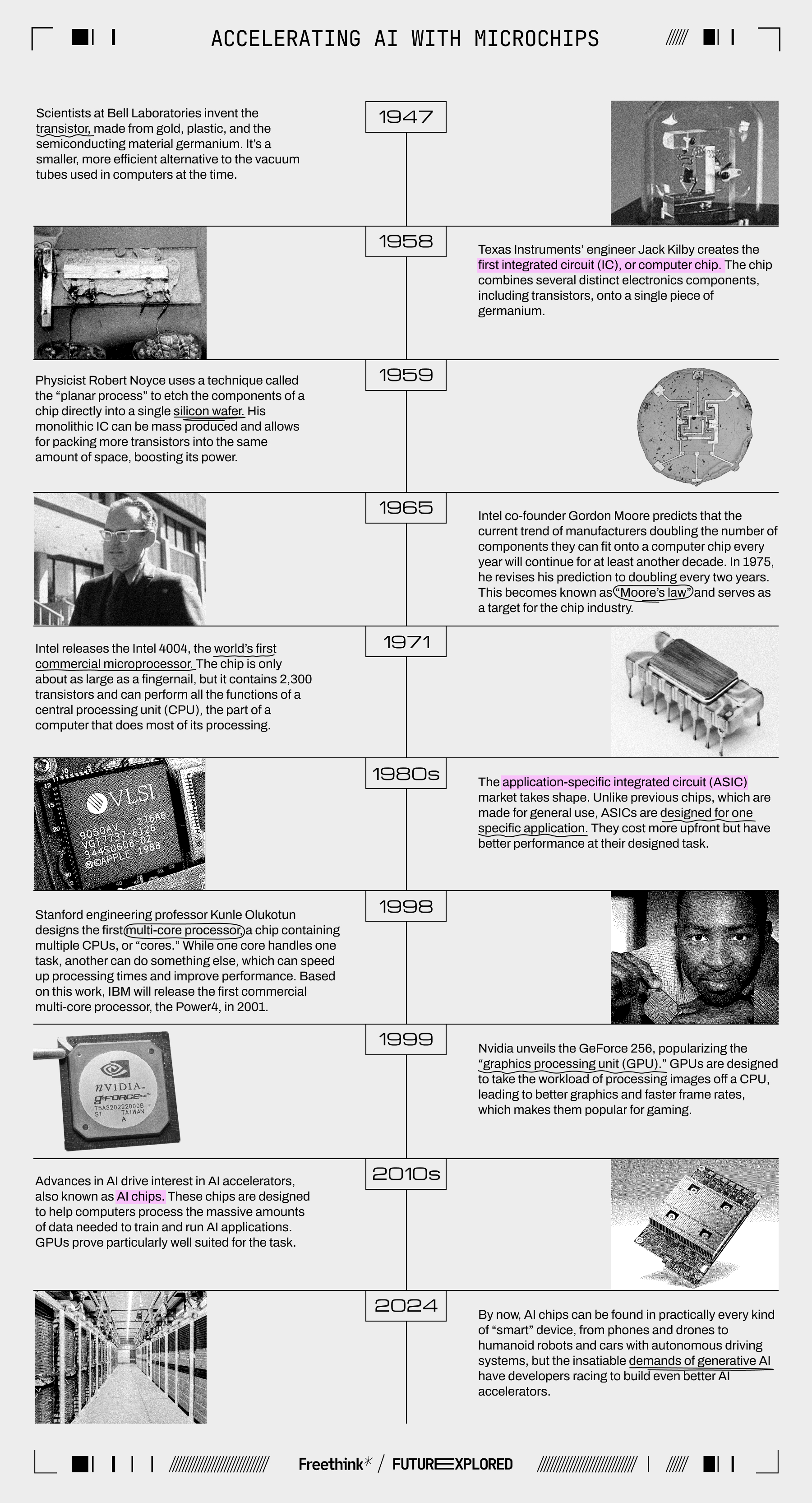

Where we’ve been

Where we’re going (maybe)

To say the AI chip market is hot right now would be a huge understatement. In 2022, it was valued at about $16 billion, but this year, it’s expected to exceed $50 billion, and forecasters are predicting a value of more than $200 billion by 2030.
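For a rough sense of how steep that forecast is, the 2022 and 2030 figures can be turned into an implied average yearly growth rate. The snippet below is a back-of-the-envelope sketch, not part of any forecaster's model: the `cagr` helper is a hypothetical name, and it treats "about $16 billion" and "more than $200 billion" as exact endpoints.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1

# Endpoints taken from the figures quoted above, treated as exact for illustration.
market_2022 = 16e9
market_2030 = 200e9

rate = cagr(market_2022, market_2030, 2030 - 2022)
print(f"Implied average growth: {rate:.1%} per year")
```

Under those assumptions, the market would need to grow by roughly 37% a year, every year, for eight years.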

Twenty-five years after the release of the GeForce 256, Nvidia is still the king of GPUs, and that has given it a major advantage in the AI chip market. Analysts at Citi Research estimate it holds about 90% of the market and will continue to do so for the next two to three years.

This dominant position fueled a massive run-up in Nvidia’s stock price over the last year, making it one of the most valuable companies in the world, with a market capitalization of over $3 trillion.

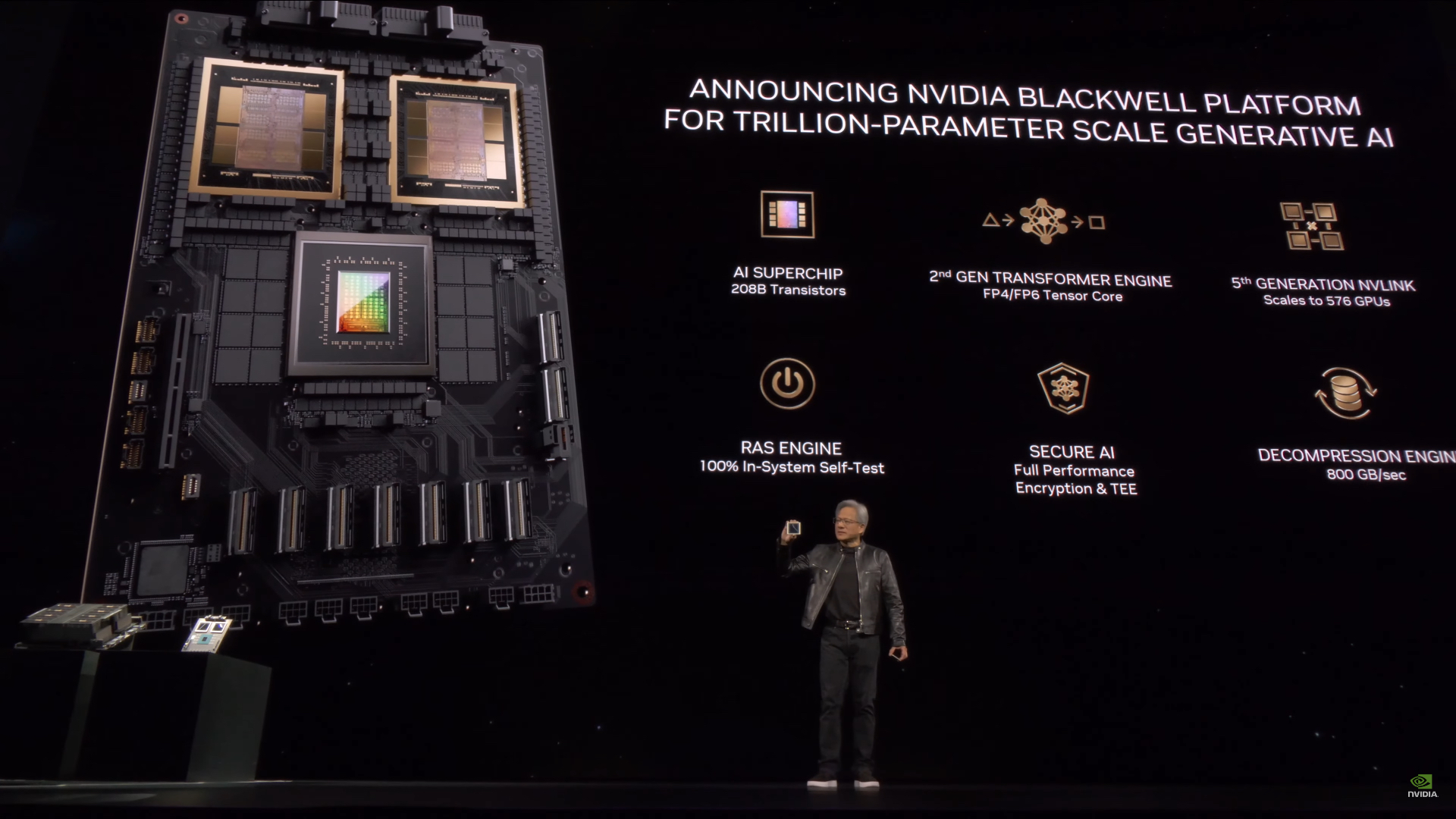

To make sure it stays on top, Nvidia is churning out new GPUs specifically designed to be used as AI accelerators.

In March, CEO Jensen Huang unveiled Blackwell, a new GPU with 208 billion transistors, as well as an “AI superchip” that combines two Blackwell GPUs with a CPU on a single board. Nvidia says this superchip can cut the cost and energy consumption of training and running some generative AIs by a factor of up to 25 compared to its previous best GPU, Hopper.

“Blackwell offers massive performance leaps and will accelerate our ability to deliver leading-edge models,” said Sam Altman, CEO of OpenAI.

Huang then announced in May that Nvidia would be ramping up development so that it can release new AI chips every year, rather than every other year. Then, at COMPUTEX 2024 in June, he revealed Blackwell’s successor, a platform called Rubin (specifics TBD).

“The future of computing is accelerated,” said Huang. “With our innovations in AI and accelerated computing, we’re pushing the boundaries of what’s possible and driving the next wave of technological advancement.”

Nvidia may have a sizable lead, but money is an excellent motivator, and tech companies old and new are striving to end its dominance of the AI chip market — or at least secure themselves a sizable slice of it.

While some of these groups, including AMD, are following Nvidia’s lead and optimizing GPUs for generative AI, others are exploring alternative chip architectures.

Intel, for example, markets field programmable gate arrays (FPGAs) — an architecture with reprogrammable circuitry — as AI accelerators. Startup Groq, meanwhile, is developing a brand new kind of AI chip architecture it calls a “language processing unit” (LPU) — it’s optimized for large language models (LLMs), the kinds of AIs that power ChatGPT and other chatbots.

Etched, a startup founded by three Harvard dropouts, also thinks specialization is the best approach to building AI chips, developing Sohu, an application-specific integrated circuit (ASIC) that runs just one type of AI — transformers — very, very well.

Transformers are one of the newest kinds of AI systems, having been introduced by Google researchers only in 2017. They were created to improve AI translation tools, which, at the time, worked by translating each word in a sentence one after another.

Google’s transformer model was able to look at the entire sentence before translating it, and this additional context helped the AI better understand the meaning of the sentence, which led to more accurate translations.
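The mechanism that lets a transformer weigh every word against every other word is called attention. Here is a minimal sketch of the idea in plain Python, under heavy simplifying assumptions: the three two-number "embeddings" are made up, and each word's vector serves as its own query, key, and value, whereas real transformers learn separate projections for each and run many attention "heads" in parallel.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with no learned weights.

    Each input vector acts as its own query, key, and value, which is a
    simplification made for illustration only.
    """
    dim = len(vectors[0])
    outputs = []
    weights_per_token = []
    for query in vectors:
        # Score this word against every word in the sentence, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all word vectors according to those attention weights.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        weights_per_token.append(weights)
    return outputs, weights_per_token

# Hypothetical 2-dimensional embeddings for a three-word sentence.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(sentence)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how much one word "pays attention" to every word in the sentence. Because every word is scored against every other word, the work grows quickly with sentence length, which is part of why this kind of model is so hungry for specialized hardware.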

Transformers soon proved to be useful for far more than language translation. They have played a pivotal role in the generative AI explosion of the past few years, putting the big “T” in “ChatGPT” and enabling the creation of AI models that can generate text, images, music, videos, and even drug molecules.

“It’s a general method that captures interactions between pieces in a sentence, or the notes in music, or pixels in an image, or parts of a protein,” Ashish Vaswani, co-author of Google’s transformer paper, told the Financial Times in 2023. “It can be purposed for any task.”

In 2022, Etched’s co-founders decided to put all their chips on transformers (so to speak), betting that they would be important enough to the future of AI that a microchip optimized to run only transformer-based models would be incredibly valuable.

“There aren’t that many people that are connected enough to AI companies to realize the opportunity and also crazy enough to take the bet — that’s where a couple 22-year-olds can come in and give it a swing,” Etched co-founder Robert Wachen told Freethink.

“When we started, this was incredibly crazy,” he continued. “Now it’s only mildly crazy.”

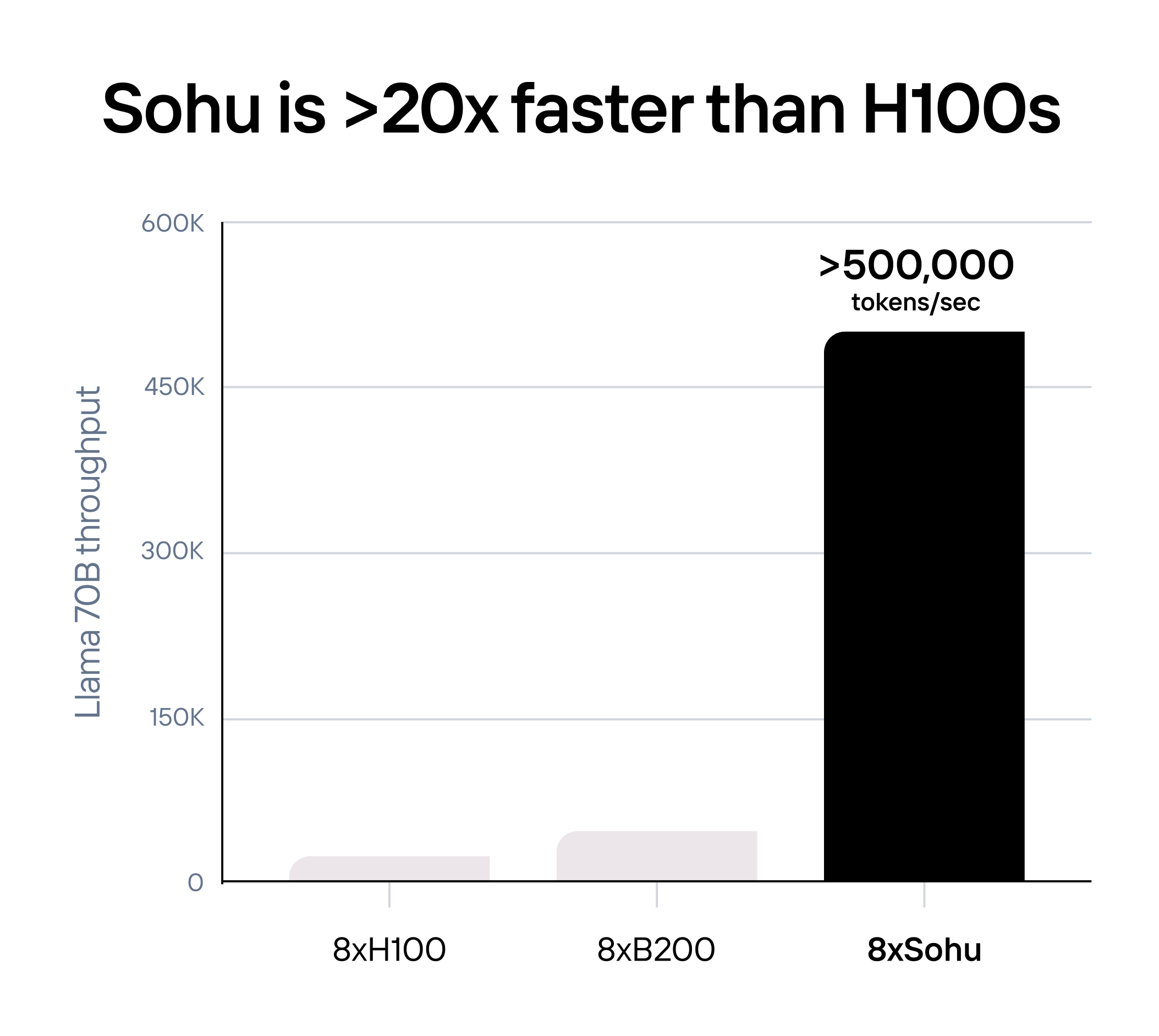

Because Sohu only has to support one type of AI, Etched could cut anything unnecessary for that application, and this streamlining led to efficiency gains — according to Etched, a server with eight Sohu chips could replace 160 of Nvidia’s Hopper GPUs doing the same task.
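To put Etched's claim in per-chip terms, the arithmetic can be sketched as follows. This simply takes the company's "eight Sohu chips for 160 Hopper GPUs" figure at face value; the `sohu_servers_needed` helper is hypothetical, and real-world sizing would depend on the model and workload.

```python
import math

# Figures as claimed by Etched, not independently verified.
SOHU_CHIPS_PER_SERVER = 8
HOPPER_GPUS_REPLACED_PER_SERVER = 160

# How many Hopper GPUs each Sohu chip would stand in for.
gpus_per_sohu = HOPPER_GPUS_REPLACED_PER_SERVER / SOHU_CHIPS_PER_SERVER

def sohu_servers_needed(hopper_gpus):
    """Eight-chip Sohu servers needed to match a given Hopper fleet (rounded up)."""
    return math.ceil(hopper_gpus / HOPPER_GPUS_REPLACED_PER_SERVER)

print(f"One Sohu chip per {gpus_per_sohu:.0f} Hopper GPUs")
print(f"Servers to match 1,000 Hopper GPUs: {sohu_servers_needed(1000)}")
```

If the claim holds, each Sohu chip would do the work of 20 Hopper GPUs on transformer workloads.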

“A general purpose chip is basically a Swiss Army knife — it needs to be good enough at everything, and that requires being not world-class at anything,” said Wachen. “I would say we’re much more like a steak knife — we only do one thing, and we do it way, way better, but we’re going to be much less flexible.”

That inflexibility could be the startup’s downfall. If transformers fall out of favor, or if someone beats Etched to market with a transformer-specific AI chip, it could kill the company.

“People have been burned badly by specializing before,” said Wachen. “You could build something that’s 20 times better at a use case that nobody wants because by the time your hardware comes out, they’ve moved on to the next thing.”

Etched is moving fast, though: it recently raised $120 million in a funding round that included PayPal co-founder Peter Thiel and Replit CEO Amjad Masad, and that money will allow it to start production of Sohu chips later this year.

Wachen says the startup already has contracts worth tens of millions of dollars for that first production round, too, with customers including AI companies, cloud platforms, and others.

“The people that are generally excited enough about our hardware to take a leap are people that are just bottlenecked by today’s hardware,” said Wachen. “They know that the next generation of Nvidia GPUs is going to be better, but not good enough.”
