Why artists shouldn’t fear AI

In 2015, artificial intelligence started doing something strange and magical. It started creating images based on nothing but text input. One of the earliest examples was created from the words “a stop sign is flying in blue skies.” This crude series of images was among the results:

I’m assuming these were the better ones too. They don’t look like much but they are nonetheless awesome. These are new and unique images created from nothing but a simple text description. Before this, software generally behaved in a very linear way. You directed it to do a specific thing and it did precisely that. These images required something more amorphous, something that feels more like thought. This technology came to be known as image generation.

In 2021, image generation made a huge leap with a system from OpenAI called DALL-E. (The weird name is a combination of the names of artist Salvador Dalí and the Pixar robot WALL-E. DALL-E was actually an outgrowth of the now-famous GPT.) A signature series of images from DALL-E was generated with the prompt “an armchair in the shape of an avocado.”

Quite suddenly, AI images were fairly comprehensible. And even more importantly, the AI combined ideas, like the various qualities of avocados with different types of chairs. Combining ideas like this is one of the defining characteristics of creativity.

In the span of six years, image generation AI went from the level of a child to that of a mediocre art student. But the truly huge leap would come one year later.

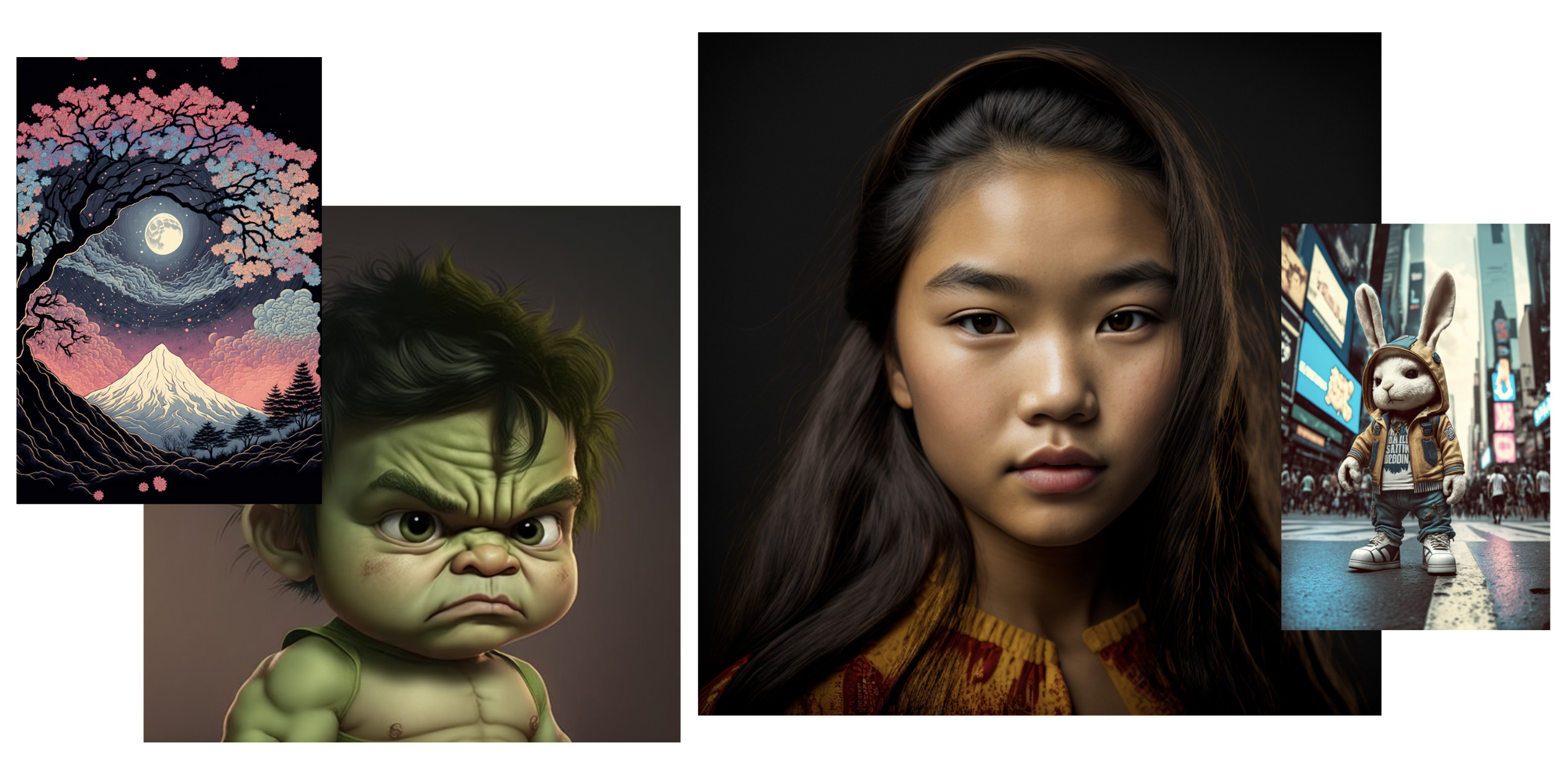

2022 is when image generation reached something like human parity and the AI hype train truly left the station. There were now three popular image generators—DALL-E, Stable Diffusion, and Midjourney—and they were making images like the ones below. No human hand drew a stroke here. These were entirely created with text prompts.

AI-generated art is now very, very good. Only the most elite artists are better, and even they can’t match AI in some areas, like fine details that are tedious and laborious to render by hand.

AI art still has weaknesses. Generating realistic-looking hands has proven particularly difficult. They’re often drawn backwards, sometimes with three fingers or seven or none. This has improved recently, but remains imperfect.

I’m nitpicking, so let me be clear: This is amazing. Just a few years ago this quality of AI art was pure science fiction. AIs are not only creating art, they’re doing it with beauty, stunning versatility, and even subtlety.

AI has had similarly epochal breakthroughs in text generation (GPT, Google Bard) and coding (GitHub Copilot), but it’s AI art that has sparked the fiercest debate and generated anger and fear in the art community.

On artist portfolio sites there have been large, self-organized protests where countless users simultaneously posted a “NO AI” image.

In a widely seen video essay, the artist Steven Zapata describes a grim future in which human beings are entirely removed from the creative process and popular culture is pumped out by machines owned by tech behemoths. Zapata claims AIs “will produce novels, essays, and scripts in amounts that can fill the library of Babel, each piece a composite of half quotations and unattributed swipings.”

The anxieties of the art community have been triggered by something genuinely profound: machines have breached a sacred realm we thought was solely the domain of people.

The first battleground of The Age of AI is art.

Let’s all take a breath

The AI hype inferno is raging out of control right now, and these narratives are just as often utopian as they are dystopian. I’m going to heave a few much-needed buckets of water on this wildfire.

My focus will be on the world of creativity, a world I know well and have studied for many years through my video series Everything is a Remix. Many of my friends and colleagues are filmmakers, designers, illustrators, writers, and photographers. Right now, people from these fields are deeply afraid and often angry. This existential dread seems out of proportion with reality.

Why believe me? I’m not an AI expert, just a lover of technology who uses these tools. (And yes, I find them very exciting, very fun, and definitely concerning, more for their incompetence than their competence.)

I’m as trustworthy as anyone else because, as you will soon see, the experts are too often high on their own fumes. Another research topic of mine has been conspiracy theories, so I’m well acquainted with our human tendency to—I’ll put it politely—get carried away. AI’s thought leaders have gotten carried away, fantasy and reality have blended, and this has convinced many, including many artists, that the robot takeover is imminent. My guess (yes, I’m guessing too but at least I’m admitting it) is that most creative work is safe for many years to come.

The Singularity is not near

Many artists fear that AI’s rocketing abilities will soon go beyond image generation and expand into all realms of creativity, including areas like writing and creative direction. (This is the argument of Steven Zapata’s essay.) This is an entirely sensible take. After all, it’s what the visionaries of artificial intelligence are telling us.

AI’s leading thinkers envision a near future in which thinking machines become as smart as humans. In their telling, the next major milestone is not, as one might expect, mouse-level intelligence or even crow-level intelligence. We’re skipping all that. Next floor: human-level intelligence. This human parity has been dubbed artificial general intelligence, or AGI, and many brilliant people, like Google DeepMind’s Demis Hassabis, think it will be reached within a couple of decades. Artists find this tale of human irrelevance frightening. AI fans, for some reason, love it and can’t wait for AGI’s arrival.

Why do I think we shouldn’t believe the geniuses of AI? Because similarly brilliant people have been making similar predictions for as long as there has been artificial intelligence and their track record is an unbroken chain of hopelessly wrong, utterly naïve predictions.

The leading minds of AI are brilliant wizards indeed, but they are (at least for now) still people, and are subject to the same human proclivities for overconfidence and irrational optimism as the rest of us.

In particular, we humans love a Coming Great Event, whether it’s the Rapture of Christian evangelicals, The Storm of QAnon, the info-utopia that early-internet storytellers foresaw, or, most recently, the Metaverse. Remember that one?

AI’s top minds clearly love a Coming Great Event, too. They’ve been claiming human-level machine intelligence is almost here since the fifties. Many of the pioneers of artificial intelligence, towering figures like Herbert Simon, Marvin Minsky, and John McCarthy, predicted that machines would match human intelligence by about the eighties. What we actually got were things like the first Macintosh. Pretty cool, but very, very, very far away from a thinking machine. Like light years.

But surely, more recent predictions that are based on more sophisticated technology have fared better, right? No, they have not.

A couple of these predictions targeted right now as the time AGI would emerge. Shane Legg, the co-founder of Google DeepMind, said it would be “the mid-2020s.” Meta CEO Mark Zuckerberg said their goal was 2025. AI development is certainly moving fast but GPT is nowhere near becoming a non-psychotic HAL 9000.

The long-reigning king of dark tech prophecy is Ray Kurzweil. He’s spent decades predicting the arrival of “the Singularity,” a kind of sci-fi rapture, a period of rapid and incomprehensible acceleration that includes AGI and basically all other realms of technology. And his date for the arrival of AGI is right around the corner. In the documentary Transcendent Man, Kurzweil said, “I’ve set the date 2029, a machine, an AI, will be able to match human intelligence and go beyond it.”

Six years is a while but it’s not that far off. The state of the art in AI, GPT, remains prone to fabrications, hallucinations, and weird nonsense. And much of what it does is actually not that impressive. Many of the responses I’ve gotten from it are bland rewordings of Wikipedia. Again, let me be clear: GPT is incredible, it just doesn’t seem anywhere near becoming an AGI.

Hey, maybe Kurzweil will get the last laugh, but it seems unlikely that the Singularity is as near as 2029.

Predictions about the Coming Great Event have been plentiful, they have been wrong, and I wouldn’t wager on 2029 coming through. There are plenty of AI experts who agree that AGI is nowhere in sight.

The cognitive scientist Gary Marcus is among the most vocal and energetic of AI critics. He’s agnostic about when AGI will arrive, but he’s highly skeptical that our current path will lead there. Marcus recently referred to our current route as “building ladders to the moon.”

Erik J. Larson, the author of The Myth of Artificial Intelligence, also argues that current AI technologies are not going to lead to AGI. Larson said, “Any foreseeable extension of the capabilities that we currently have do not result in general intelligence. Just point blank. They just don’t.” Larson claims AGI would require innovations that do not exist and it’s impossible to predict when—or if—they will arrive.

Oren Etzioni, an esteemed figure in the field of AI, has made clear that predictions about the arrival of AGI are a fool’s errand. At a 2017 conference, Etzioni said, “My answer to when is, take your estimate, double it, triple it, quadruple it. That’s when.”

Matter of fact, expert projections on the arrival of AGI range from now … to never. In other words, they don’t know.

Derivative by design, inventive by chance

I’ve taken some time to explain how dubious the narrative of imminent AGI is because AGI casts an immense, gloomy shadow over the public imagining of where things are headed. Artists are feeling this pain most intensely because their skills are among the first to be rivaled. Many creatives think human-level artificial intelligence is not far off, which would presumably mean the extinction of pretty much all creative work. The dubious narrative of impending AGI is driving many artists to despondency.

Some might counter that things are plenty scary without making any predictions about AGI. AI art is good enough right now and it’s going to keep improving. That’s a huge problem on its own, no superintelligence needed.

This is a far better argument, but we’ve already been down roads like this. Skilled workers have been getting replaced by machines for decades. Website programmers were replaced by services like Squarespace. Software programmers were replaced by low-code or no-code applications. Photographers and videographers were replaced by high-quality cameras on our phones. And yet, people continue doing these jobs. All these industries are still viable. Matter of fact, making art has been getting cheaper, faster, and easier since the printing press, which displaced an entire class of monks who painstakingly hand-copied books with quill and parchment.

AI art will certainly proliferate on the low end, but it’s unclear if it can meet the demands of advertising agencies, production companies, and game developers. Something often seems missing from AI art. The images lack storytelling because AIs don’t know what that is. They lack expressiveness because the AI doesn’t know what that is. AI art often feels generic, plastic-y.

But even more importantly, AIs do not innovate. They synthesize what we already know. AI is derivative by design and it is inventive by chance. As Gary Marcus wrote on Substack, “GPT’s regurgitate ideas; they don’t invent them.” (Under the hood, the image generators share this trait with GPT: they learn patterns from existing human-made work and recombine them.)

Computers can now create but they are not creative. To be creative you need to have some awareness, some understanding of what you’ve done. AIs know nothing whatsoever about the images and words they generate. (Hat tip here to Melanie Mitchell, who expressed this idea about awareness in her excellent book, Artificial Intelligence: A Guide for Thinking Humans.)

Most crucially, AIs have no comprehension of the essence of art: living. AIs don’t know what it’s like to be a child, to grow up, to fall in love, to fall in lust, to be angry, to fight, to forgive, to be a parent, to age, to lose your parents, to get sick, to face death. This is what human expression is about. AIs do not get it. And they’re not going to in any future that is realistic.

Art is the voice of a person. And whenever AI art is anything more than aesthetically pleasing, it’s not because of what the AI did. It’s because of what a person did. Art is by humans, for humans.

***

This article is based on an episode of Ferguson’s Everything is a Remix series, originally published on YouTube.