Australian researchers have used the “wonder material” graphene to develop a sensor that could enable anyone to control robot technology with their minds.

“The hands-free, voice-free technology works outside laboratory settings, anytime, anywhere,” said co-developer Francesca Iacopi. “It makes interfaces such as consoles, keyboards, touchscreens, and hand-gesture recognition redundant.”

The challenge: Brain-computer interfaces (BCIs) are systems that translate brain activity into commands for machines, usually for medical reasons. A person with a limb amputation can use one to control a prosthetic with their mind, while someone with paralysis could use a BCI to “type” words on a computer screen just by thinking about them.

BCIs have plenty of potential non-medical applications, too — one day you might use one to control your smartphone with your thoughts or even supercharge your intelligence by “merging with AI,” as Elon Musk, CEO of brain tech company Neuralink, has suggested.

The catch is that reading brain signals at the level needed for any complex BCI is currently hard to do without either surgically implanting tech into a person’s skull (which is dangerous and invasive) or fitting them with an external headset containing “wet” sensors.

The problem with wet sensors is that they’re covered in gels that can cause skin irritation or prevent the sensors from staying in place. The gel eventually dries up, too, meaning there’s a time limit on how long the sensors work.

What’s new? Backed by funding from Australia’s Defence Innovation Hub, researchers at the University of Technology Sydney (UTS) have now developed an effective “dry” sensor made from silicon and graphene, a carbon-based material just one atom thick.

Graphene was already considered an attractive material for dry sensors, but durability and corrosion issues had held it back. Adding a silicon substrate allowed the UTS team to overcome those limitations.

“We’ve been able to combine the best of graphene, which is very biocompatible and very conductive, with the best of silicon technology, which makes our biosensor very resilient and robust to use,” said Iacopi.

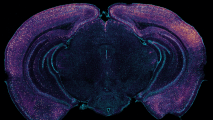

Obedience training: To test their dry sensors, the UTS researchers built them into a BCI featuring an augmented reality headset programmed to display multiple squares of light.

Each square is linked to one of six different commands for a robot dog. To issue a certain command, the wearer just concentrates on the corresponding square — the dry sensors placed behind their ear read their brain activity and send the order to the robot dog.
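
The article does not describe how the system works out which square the wearer is focusing on. One common approach in visual BCIs is to flicker each target at its own frequency and then look for that frequency in the recorded signal. The minimal sketch below assumes that approach purely for illustration: the command names, the flicker frequencies, the sample rate, and the function names are all hypothetical and are not taken from the UTS system.

```python
import math

# Hypothetical mapping: the article mentions six commands but names none of
# them and gives no frequencies. Every value here is an illustrative assumption.
COMMANDS = {
    7.0: "forward",
    8.0: "backward",
    9.0: "turn_left",
    10.0: "turn_right",
    11.0: "sit",
    12.0: "stand",
}


def band_power(samples, freq_hz, sample_rate_hz):
    """Power of `samples` at one frequency (a single-bin Fourier sum)."""
    step = 2 * math.pi * freq_hz / sample_rate_hz
    re = sum(s * math.cos(step * n) for n, s in enumerate(samples))
    im = sum(s * math.sin(step * n) for n, s in enumerate(samples))
    return (re * re + im * im) / len(samples)


def decode_command(samples, sample_rate_hz):
    """Return the command whose assumed frequency carries the most power."""
    best = max(COMMANDS, key=lambda f: band_power(samples, f, sample_rate_hz))
    return COMMANDS[best]


def synthetic_signal(freq_hz, sample_rate_hz=250, seconds=2.0):
    """Clean sine wave standing in for a recorded brain signal (assumption)."""
    count = int(sample_rate_hz * seconds)
    step = 2 * math.pi * freq_hz / sample_rate_hz
    return [math.sin(step * i) for i in range(count)]
```

Calling `decode_command(synthetic_signal(10.0), 250)` picks the command assigned to 10 Hz in this made-up table. Real recordings are far noisier than a clean sine wave, so a practical decoder would need filtering and a more robust classifier than this single comparison.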

When the researchers teamed up with the Australian Army to demonstrate this system, the correct command was issued with an average accuracy of 94%.

“We are exploring how a soldier can operate an autonomous system — in this example, a quadruped robot — with brain signals, which means the soldier can keep their hands on their weapon,” said Kate Tollenaar, the project lead with the Australian Army.

Looking ahead: The dry sensors still weren’t as accurate as wet ones, but the difference was small enough that the researchers believe a headset with more precise sensor placement and pressure could mostly close the gap.

If that’s true, the system could put us a step closer to noninvasive BCIs for myriad applications, both on and off the battlefield.

“The potential of this project is actually very broad,” said Damien Robinson, who demonstrated the BCI for the Army. “At its core, it’s translating brain waves into zeros and ones, and that can be implemented into a number of different systems. It just happens that in this particular instance we’re translating into control through a robot.”
