This article is an installment of Future Explored, a weekly guide to world-changing technology.

It’s 2030. You wake up, and instead of reaching for your phone, you pop on a pair of sleek smart glasses. Throughout the day, you converse with a powerful AI assistant that uses the specs to pepper your view with useful information, seamlessly merging your physical and digital worlds.

The smart glasses era

In the 1990s, mobile phones could make calls or text, and PCs (with a modem) could send email, surf the internet, or play games. The following decade combined them and gave birth to the smartphone — changing practically everything about how we navigate the world.

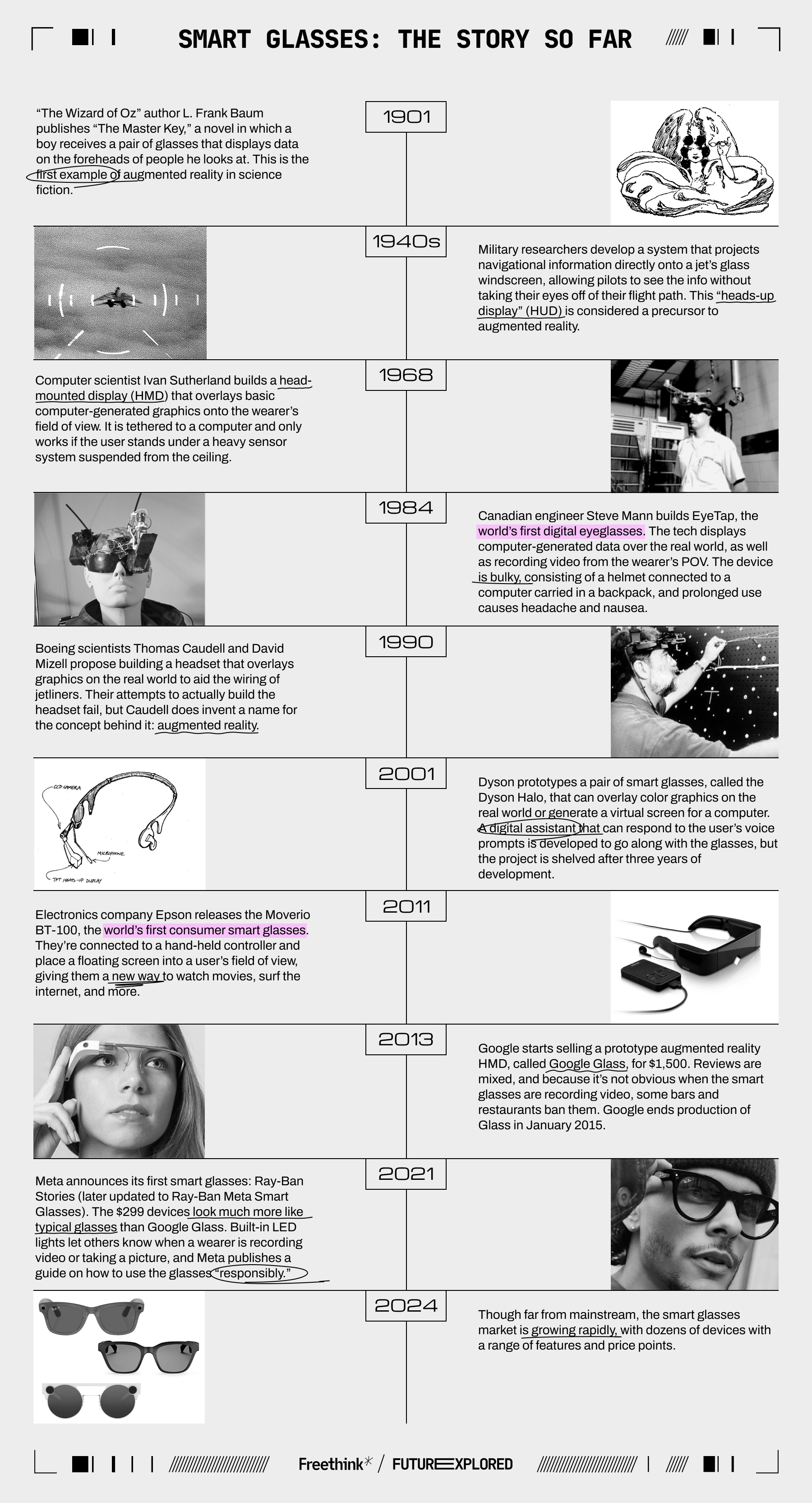

Smart glasses that combine personal computing, AI, and augmented reality could be the next life-changing consumer tech device. To find out how, let’s take a look back at the history of smart glasses and the trends that could lead us into a future where our tech literally alters how we see the world.

Where we’ve been

Where we’re going (maybe)

Google Glass may have been an expensive failure for Google, but it was a valuable case study for the rest of the tech industry, teaching developers a lot about what people do not want, and those insights are shaping the current crop of smart glasses.

While Google Glass looked like a prop from “The Matrix,” for example, most of today’s smart glasses have a much more low-key design. This helps wearers avoid being stigmatized as modern-day “Glassholes” trying to film others without their consent (even if it doesn’t actually stop this from happening).

While the smart glasses market is growing, there’s still no guarantee the devices will ever become mainstream, let alone replace smartphones as our go-to personal electronics. The industry is still searching for its “killer app,” the feature that people just can’t get without the tech — and this might be the one thing Google got right about smart glasses with Google Glass.

When Epson released its smart glasses in 2011, it marketed the devices as being for “personal media enjoyment” — put on a pair, and you can browse the web or watch movies wherever you are — but Google’s 2012 concept video for Glass emphasized a very different kind of use case.

Rather than providing passive entertainment, these smart glasses were going to be helpful. They’d send you text notifications, help you keep track of your schedule, and overlay useful graphics, such as walking directions, onto your field of view.

The digital assistant in the Google Glass devices that actually shipped was nowhere near as useful — reviewers reported getting frustrated with the buggy software, which often misunderstood what they said or wanted.

Today, far better AI assistants are a reality, thanks to advances in generative AI.

Since the release of the hugely popular ChatGPT in 2022, seemingly every tech company has started trying to figure out how to use generative AI to create the ultimate digital assistant, and many of these AIs, including Meta’s offering, are already being integrated into smart glasses.

“[S]mart glasses are very powerful for AI because, unlike having it on your phone, glasses, as a form factor, can see what you see and hear what you hear from your perspective,” Meta founder Mark Zuckerberg told The Verge in 2023.

Google hasn’t given up on the future it predicted in the Glass concept video, either.

In May 2024, Google DeepMind released a demo video in which its in-development AI assistant, Project Astra, is used with a pair of smart glasses. Greg Wayne, research director at Google DeepMind and Project Astra lead, told Freethink the glasses are a “functional research prototype” from the company’s AR team.

“Universal AI agents have the potential to positively impact many aspects of day-to-day life, from enabling the visually impaired to translating languages fluidly to providing real-time information about the physical world,” said Wayne.

“Depending on the use case,” he continued, “it’s easy to envision a future where people could have an expert AI assistant by their side through a phone, glasses, or other next-generation devices.”

Project Astra isn’t available to the public yet, and the advanced AI assistants that are available, like Meta AI, aren’t reliable enough to be indispensable: sometimes they’ll return useful answers to your questions, and sometimes they’ll confidently tell you that the potato you’re holding is a smartphone. Often, you won’t really know whether an answer is right without looking it up on your phone anyway.

This habit of presenting false information as true, or “hallucinating,” is a major problem with generative AIs, and how to solve it — if it even is solvable — is still TBD.

Even if we do end up with super-advanced AI assistants, there’s no guarantee people will prefer interacting with AI through something new, like smart glasses (or a pin or a bright orange box), over the phones we’re already using.

If we still need to carry around a smartphone in order to engage with the AI — as you do to use the Ray-Ban Meta Smart Glasses — it limits the potential value of carrying around another device.

“The best form factor for advanced AI assistants will ultimately be determined as researchers, designers, users, and the wider field explore different use cases,” said Wayne. “At this stage, it is too early to predict what that hardware will be, but it’s an exciting time for innovation, collaboration, and creativity.”

If today’s AIs don’t end up leading to tomorrow’s uber-helpful digital assistants, smart glasses developers will definitely need to figure out another killer app that gets people buying their devices — which brings us back to Epson and the very first smart glasses.

While some smart glasses makers are banking on people wanting to use their specs to interact with advanced AIs, others are trying to lure in customers by emphasizing how the glasses can replace screens for both entertainment and work.

The basic technology has come a long way since 2011. The smart glasses these companies are selling are sleeker than Epson’s Moverio BT-100s, with bigger virtual screens, higher image quality, and more functionality. Still, they typically aren’t useful on their own: you need additional tech, such as a laptop or a smartphone and a Bluetooth mouse and keyboard.

To make it easier for people to make the leap to using smart glasses over standard screens, tech startup Sightful created the Spacetop, a screen-free laptop that comes with a pair of modified Xreal Air 2 Pro smart glasses.

“We reduced [the glasses’] weight to 84 grams, modernized its design to resemble everyday sunglasses, and … added automatic dimming,” Tamir Berliner, Sightful’s co-founder and CEO, told Freethink. “We also worked with Xreal on modifications for the text rendering pipeline in order to improve text legibility and reduce eye strain, further allowing a full day of productivity.”

The $1,900 Spacetop is currently only available for preorder (deliveries are expected to begin in October 2024), so it’s too soon to say whether it’ll be popular enough to start a new trend of smart glasses as part of all-in-one computing systems, but Berliner thinks his company’s approach could help people get comfortable looking at the world from behind high-tech specs.

“To get to that future, where AR/VR headsets eventually replace our everyday devices, we need to make the transition easier,” he told Freethink. “That’s why we believe that Spacetop’s form factor of a laptop keyboard with a spatial ‘screen’ is the next natural evolution of laptops and computers.”
